Let’s explore patterns for reprocessing messages in Kafka. When working with Kafka, it is important to handle failures and errors robustly. Spring Boot provides a convenient way to handle these failures and reduces the need for boilerplate code. Even if you are not a Spring Boot expert, you can apply the same principles in your own programming environment. The examples in this article can be used with any Kafka-compatible streaming data platform.

Two types of error can cause consumer processing, such as order processing, to fail: non-transient errors and transient errors.

Non-transient errors are permanent: retrying the same message will not make it succeed, so they cannot be recovered automatically. There are two common causes of non-transient errors:

1. Malformed payloads or log messages are the first cause: a record whose bytes cannot be deserialised into the expected type cannot be processed at all. In a Spring Kafka consumer, for example, you configure the key and value serialisers and deserialisers. By using the error handling deserialiser, you can capture the message that failed to deserialise and route it to a dead letter topic (DLT) for later recovery. You keep access to the failed message and can take appropriate action to fix and reprocess it.

2. Validation errors in consumers are the second cause of non-transient errors. After deserialisation, a message may still fail the business rules or validation criteria. In an order processing system, for example, an order may have a negative amount or a missing customer ID. Such messages should not be processed further or retried; they should be sent to a dead letter topic instead. By throwing a runtime exception that routes the message to the dead letter topic, you ensure that the invalid message is captured and can be fixed and reprocessed later.
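The following is a minimal, library-free sketch of both non-transient causes in plain Java 17. It is not the Spring Kafka API: in-memory lists stand in for topics, and `OrderEvent`, `NonTransientErrorSketch` and the comma-separated wire format are hypothetical. It shows the key property: a record that cannot be deserialised, or that fails validation, goes to the dead letter topic with its original bytes and is not retried.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical order event; the "orderId,customerId,amountCents" wire format is an assumption.
record OrderEvent(String orderId, String customerId, long amountCents) {}

// A dead-letter entry keeps the original bytes so the message can be fixed and replayed later.
record DeadLetter(byte[] rawValue, String reason) {}

// Thrown when a well-formed message breaks a business rule.
class ValidationException extends RuntimeException {
    ValidationException(String message) { super(message); }
}

class NonTransientErrorSketch {
    final List<DeadLetter> deadLetterTopic = new ArrayList<>(); // in-memory stand-in for a DLT
    final List<OrderEvent> processed = new ArrayList<>();

    // Cause 1: malformed payload. Throws if the bytes cannot be parsed into an OrderEvent.
    static OrderEvent deserialise(byte[] raw) {
        String[] fields = new String(raw, StandardCharsets.UTF_8).split(",", -1);
        if (fields.length != 3) {
            throw new IllegalArgumentException("expected 3 fields, got " + fields.length);
        }
        return new OrderEvent(fields[0], fields[1], Long.parseLong(fields[2].trim()));
    }

    // Cause 2: validation failure. The payload parsed, but it breaks a business rule.
    static void validate(OrderEvent e) {
        if (e.customerId().isBlank()) throw new ValidationException("missing customer ID");
        if (e.amountCents() < 0) throw new ValidationException("negative amount");
    }

    // Listener body: neither failure is retried; the original bytes go straight to the DLT.
    void onMessage(byte[] raw) {
        OrderEvent event;
        try {
            event = deserialise(raw);
        } catch (RuntimeException ex) { // also covers NumberFormatException from parseLong
            deadLetterTopic.add(new DeadLetter(raw, "deserialisation: " + ex.getMessage()));
            return;
        }
        try {
            validate(event);
        } catch (ValidationException ex) {
            deadLetterTopic.add(new DeadLetter(raw, "validation: " + ex.getMessage()));
            return;
        }
        processed.add(event);
    }
}
```

In a Spring Kafka application you would not hand-write this plumbing: `ErrorHandlingDeserializer` catches the deserialisation exception, and the container's error handler (for example `DefaultErrorHandler` configured with a `DeadLetterPublishingRecoverer`) publishes the failed record.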

When handling non-transient errors, add contextual information to the failed message: the stack trace, the cause of failure, client information and version details. Wrapping the failed message with this information gives whoever investigates it the context needed to debug and fix the issue. It is also recommended to notify the producer team about the failure so that they can take responsibility for fixing and resending the message.
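As a sketch of what this wrapping could look like, the helper below attaches the exception class, message, root cause, stack trace, client ID and application version as headers next to the untouched payload. The header names and the `FailureContext` and `DeadLetterEnvelope` types are assumptions for illustration; Spring Kafka's `DeadLetterPublishingRecoverer` adds similar exception headers on its own.

```java
import java.io.PrintWriter;
import java.io.StringWriter;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

// Original payload plus diagnostic context, carried as headers on the dead-letter record.
record DeadLetterEnvelope(String originalPayload, Map<String, String> headers) {}

class FailureContext {
    // Header names are hypothetical; choose one convention and keep it stable across services.
    static DeadLetterEnvelope wrap(String payload, Throwable error, String clientId, String appVersion) {
        // Full stack trace as text, including any "Caused by:" sections.
        StringWriter trace = new StringWriter();
        error.printStackTrace(new PrintWriter(trace));

        // Walk to the innermost cause; frameworks often wrap the real error.
        Throwable root = error;
        while (root.getCause() != null) {
            root = root.getCause();
        }

        Map<String, String> headers = new LinkedHashMap<>();
        headers.put("x-exception-class", error.getClass().getName());
        headers.put("x-exception-message", String.valueOf(error.getMessage()));
        headers.put("x-root-cause", root.getClass().getName());
        headers.put("x-exception-stacktrace", trace.toString());
        headers.put("x-client-id", clientId);
        headers.put("x-app-version", appVersion);
        return new DeadLetterEnvelope(payload, Collections.unmodifiableMap(headers));
    }
}
```

Keeping the payload unchanged and putting the context in headers means the producer team can fix and resend the original message without first stripping diagnostic data out of it.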

Transient errors are temporary and can be recovered automatically. They occur when an external dependency, such as a database or a service, fails temporarily. To handle transient errors, the consumer should retry several times with fixed or incremental intervals in between. This gives the dependency time to come back online so that a later attempt can process the message successfully. If all retries fail, the message can be moved to a dead letter topic for further analysis and recovery.
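Before retrying, a handler has to decide which kind of error it is facing. A minimal sketch, assuming a hypothetical `ErrorClassifier`: exceptions from an illustrative list of transient types (timeouts, refused connections, transient SQL errors) are routed to retry, and everything else goes straight to the dead letter topic. Spring Kafka's `DefaultErrorHandler` supports the same decision through `addNotRetryableExceptions(...)`.

```java
import java.net.ConnectException;
import java.net.SocketTimeoutException;
import java.sql.SQLTransientException;
import java.util.List;

enum Route { RETRY, DEAD_LETTER }

class ErrorClassifier {
    // Exception types treated as transient: the dependency may recover, so a later attempt can succeed.
    // This list is an assumption for illustration; tune it to the clients your consumer actually uses.
    private static final List<Class<? extends Throwable>> TRANSIENT = List.of(
            SocketTimeoutException.class,
            ConnectException.class,
            SQLTransientException.class);

    // Walks the cause chain, because frameworks often wrap the real error.
    static Route classify(Throwable error) {
        for (Throwable t = error; t != null; t = t.getCause()) {
            for (Class<? extends Throwable> type : TRANSIENT) {
                if (type.isInstance(t)) {
                    return Route.RETRY;
                }
            }
        }
        return Route.DEAD_LETTER; // anything unrecognised is treated as non-transient
    }
}
```

Defaulting unknown exceptions to the dead letter topic is a design choice: it avoids retrying a poison message forever, at the cost of occasionally dead-lettering a message that a retry would have fixed.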

Kafka Consumer retry strategy:

Several retry strategies can be used for transient errors. A common approach is to configure the number of retry attempts and the delay between attempts. In Spring Kafka, both settings can be configured with annotations, with the delay given in milliseconds.
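The two settings can be captured in a small value type. The sketch below is plain Java, not Spring's annotation API; `RetryPolicy` and its fields are hypothetical. An increment of 0 gives a fixed interval, and a positive increment gives the incremental interval mentioned for transient errors. In a real application, Spring's `FixedBackOff` class (package `org.springframework.util.backoff`) covers the fixed case.

```java
// Retry settings: maximum attempts, the delay before the first retry, and an optional
// per-retry increment (0 gives a fixed delay, > 0 an incremental one).
record RetryPolicy(int maxAttempts, long initialDelayMs, long incrementMs) {
    RetryPolicy {
        if (maxAttempts < 1) throw new IllegalArgumentException("maxAttempts must be >= 1");
        if (initialDelayMs < 0 || incrementMs < 0) throw new IllegalArgumentException("delays must be >= 0");
    }

    // Delay to wait before the given attempt (1-based); attempt 1 runs immediately.
    long delayBeforeAttempt(int attempt) {
        if (attempt < 1 || attempt > maxAttempts) {
            throw new IllegalArgumentException("attempt out of range: " + attempt);
        }
        return attempt == 1 ? 0 : initialDelayMs + (attempt - 2) * incrementMs;
    }

    // Total waiting time if every attempt fails; useful for checking it fits your latency budget.
    long worstCaseWaitMs() {
        long total = 0;
        for (int a = 2; a <= maxAttempts; a++) {
            total += delayBeforeAttempt(a);
        }
        return total;
    }
}
```

For example, four attempts with a fixed 1000 ms delay wait at most 3000 ms in total, while the same policy with a 500 ms increment waits 1000, 1500 and 2000 ms, or 4500 ms in total.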

Implementing retry mechanisms in production can be challenging, especially at high message throughput, and there are several variations of the retry pattern for handling transient errors effectively.

The simplest way to retry is to block the consumer thread and retry several times in place. However, this is not ideal for high-throughput scenarios: while the main consumer thread waits, it cannot process any other messages from its partitions, so throughput drops and resources sit idle.

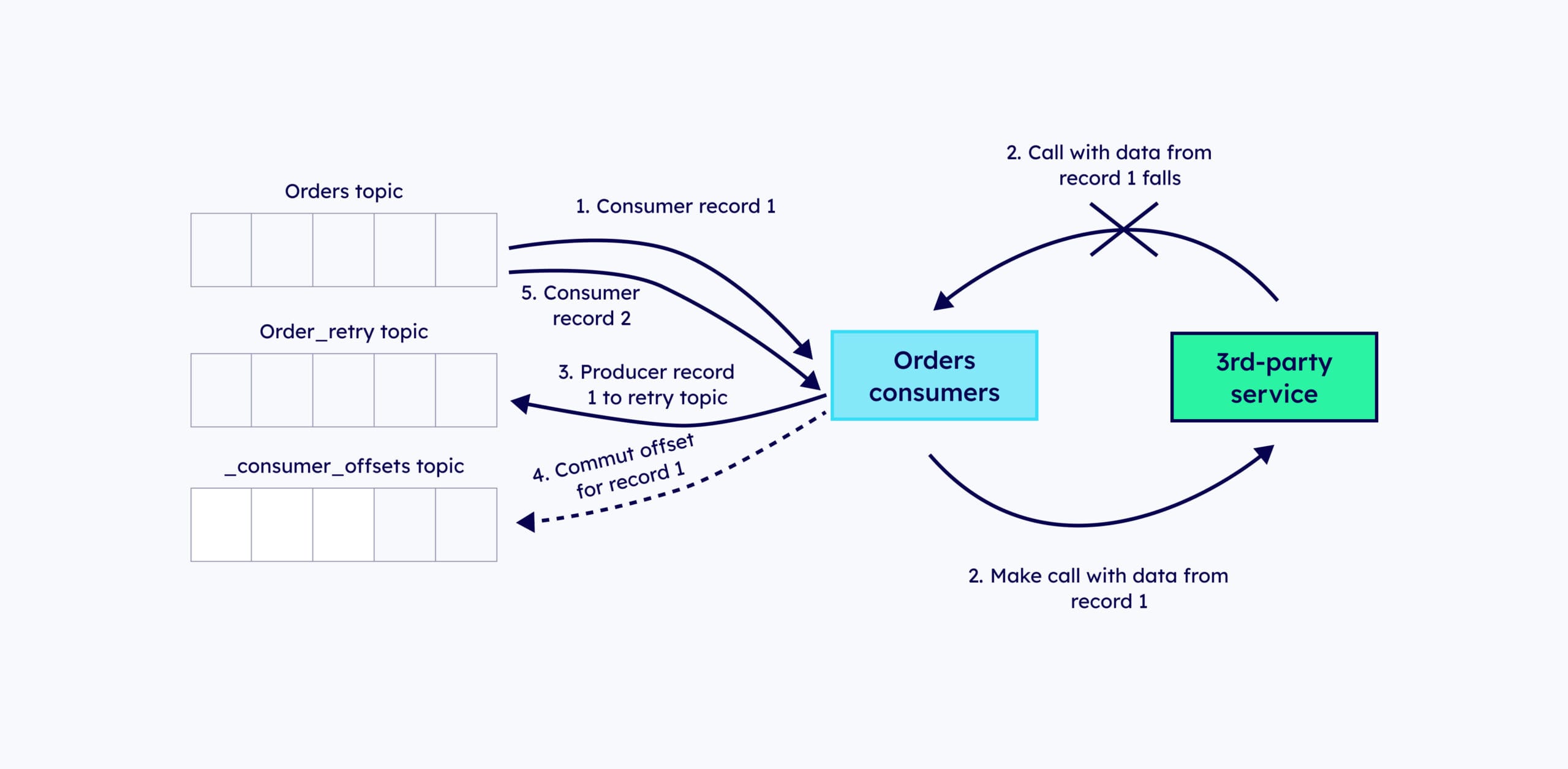
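A minimal sketch of blocking retry, assuming a hypothetical `BlockingRetry` helper. The sleep function is injected so the behaviour can be checked without actually waiting; in production you would pass `BlockingRetry::sleep`.

```java
import java.util.concurrent.Callable;
import java.util.function.LongConsumer;

class BlockingRetry {
    // Runs the task up to maxAttempts times on the calling (consumer) thread, pausing between
    // attempts. While it pauses, no other record from the assigned partitions is processed.
    static <T> T callWithRetry(Callable<T> task, int maxAttempts, long delayMs, LongConsumer sleeper)
            throws Exception {
        if (maxAttempts < 1) throw new IllegalArgumentException("maxAttempts must be >= 1");
        Exception last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return task.call();
            } catch (Exception e) {
                last = e;
                if (attempt < maxAttempts) {
                    sleeper.accept(delayMs); // the consumer thread is blocked here
                }
            }
        }
        throw last; // retries exhausted: the caller would send the record to the DLT
    }

    // Real sleeper; restores the interrupt flag if the thread is interrupted mid-wait.
    static void sleep(long ms) {
        try {
            Thread.sleep(ms);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted during retry delay", e);
        }
    }
}
```

Every pause runs on the consumer thread. If the total time spent retrying exceeds the consumer's `max.poll.interval.ms`, the consumer is considered failed and the group rebalances, which is another reason this approach suits only short, infrequent retries.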

A non-blocking, asynchronous version of retry can be implemented by introducing a retry topic. When a transient error is identified, the message is published to the retry topic, which isolates the main consumer thread from the retry process. A separate consumer or scheduling mechanism then retries messages from the retry topic. If all retry attempts fail, the message is moved to the dead letter topic.
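The flow can be sketched with in-memory queues standing in for the retry and dead letter topics. `RetryTopicSketch`, `Msg` and the topic names in the comments are hypothetical, and the delay a real retry consumer would wait before re-processing is omitted. The point is structural: the main consumer makes one attempt and moves on, and failures are retried elsewhere until `maxAttempts` is reached.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.Predicate;

// A record moving between topics, with the number of processing attempts made so far.
record Msg(String payload, int attempts) {}

class RetryTopicSketch {
    final Deque<Msg> retryTopic = new ArrayDeque<>();    // stand-in for a topic such as "orders-retry"
    final List<Msg> deadLetterTopic = new ArrayList<>();  // stand-in for a topic such as "orders-dlt"
    final List<String> processed = new ArrayList<>();
    private final int maxAttempts;
    private final Predicate<String> handler; // returns false on a transient failure

    RetryTopicSketch(int maxAttempts, Predicate<String> handler) {
        this.maxAttempts = maxAttempts;
        this.handler = handler;
    }

    // Main consumer: makes one attempt and hands failures off, so it never waits on a retry.
    void onMainTopic(String payload) {
        attempt(new Msg(payload, 0));
    }

    // Retry consumer: in a real system this runs on its own consumer or schedule.
    void drainRetryTopic() {
        int pending = retryTopic.size(); // re-queued records wait for the next pass
        for (int i = 0; i < pending; i++) {
            attempt(retryTopic.poll());
        }
    }

    private void attempt(Msg msg) {
        Msg tried = new Msg(msg.payload(), msg.attempts() + 1);
        if (handler.test(tried.payload())) {
            processed.add(tried.payload());
        } else if (tried.attempts() < maxAttempts) {
            retryTopic.add(tried);      // non-blocking: publish and move on
        } else {
            deadLetterTopic.add(tried); // all attempts used up
        }
    }
}
```

Spring Kafka can generate this topology for you with the `@RetryableTopic` annotation, which creates the retry topics and a dead letter topic for a listener.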

Kafka Consumer backoff strategy:

Another approach is exponential backoff, where the delay increases with each retry attempt. This prevents retry attempts from flooding a downstream component that is already struggling. With exponential backoff, you specify a multiplier that determines how much the delay grows per attempt: with an initial delay of 1 second and a multiplier of 2, the delays are 1, 2, 4 and 8 seconds.
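The arithmetic is small enough to show directly. A sketch, assuming a hypothetical `ExponentialBackoff` helper with a cap (`maxDelayMs`) so that delays stop growing at some point: delay(n) = initialDelay × multiplier^(n-1), limited to maxDelay.

```java
import java.util.ArrayList;
import java.util.List;

class ExponentialBackoff {
    // delay(n) = initialDelayMs * multiplier^(n-1), capped at maxDelayMs; n is the 1-based retry number.
    static long delayForRetry(int retry, long initialDelayMs, double multiplier, long maxDelayMs) {
        if (retry < 1) throw new IllegalArgumentException("retry must be >= 1");
        double delay = initialDelayMs * Math.pow(multiplier, retry - 1);
        return (long) Math.min(delay, (double) maxDelayMs);
    }

    // The full schedule of delays for a given number of retries.
    static List<Long> schedule(int retries, long initialDelayMs, double multiplier, long maxDelayMs) {
        List<Long> delays = new ArrayList<>();
        for (int r = 1; r <= retries; r++) {
            delays.add(delayForRetry(r, initialDelayMs, multiplier, maxDelayMs));
        }
        return delays;
    }
}
```

With an initial delay of 1000 ms, a multiplier of 2 and a 10 000 ms cap, five retries wait 1000, 2000, 4000, 8000 and 10 000 ms. In Spring Kafka, the same three numbers map onto the `delay`, `multiplier` and `maxDelay` attributes of Spring Retry's `@Backoff` annotation, which `@RetryableTopic` accepts through its `backoff` attribute.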

In Spring Kafka, you can configure backoff settings using annotations. You can specify the number of retry attempts, the backoff delay, and the retry strategy. The retry strategy can be either fixed or exponential, depending on your requirements.

Another, more advanced non-blocking retry pattern is the leaky bucket pattern. It uses multiple retry topics, such as retry topics 0, 1, 2 and 3, and distributes retry attempts across them so that the downstream component is not overwhelmed with retry requests.
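A sketch of the routing decision, assuming four hypothetical retry topics named `orders-retry-0` to `orders-retry-3` and a dead letter topic named `orders-dlt`. Each failure moves the record one tier further along, and each tier would have its own consumer. The per-tier delays are illustrative assumptions, not part of the pattern as described above; they show one common way to spread the retry load over time.

```java
import java.util.List;

class TieredRetryRouter {
    // Hypothetical topic names and per-tier delays; each tier is consumed by its own consumer,
    // which waits until a record's due time before re-processing it.
    static final List<String> RETRY_TOPICS =
            List.of("orders-retry-0", "orders-retry-1", "orders-retry-2", "orders-retry-3");
    static final List<Long> TIER_DELAYS_MS = List.of(1_000L, 10_000L, 60_000L, 600_000L);
    static final String DEAD_LETTER_TOPIC = "orders-dlt";

    // failedAttempts = failures so far (1 after the first failure on the main topic).
    static String nextTopic(int failedAttempts) {
        if (failedAttempts < 1) throw new IllegalArgumentException("failedAttempts must be >= 1");
        int tier = failedAttempts - 1;
        return tier < RETRY_TOPICS.size() ? RETRY_TOPICS.get(tier) : DEAD_LETTER_TOPIC;
    }

    // Earliest time a record published to a retry tier may be re-processed.
    static long dueAtMs(String topic, long failedAtMs) {
        int tier = RETRY_TOPICS.indexOf(topic);
        if (tier < 0) throw new IllegalArgumentException("not a retry topic: " + topic);
        return failedAtMs + TIER_DELAYS_MS.get(tier);
    }
}
```

Because each tier is a separate topic with its own consumer, a backlog of slow, late-stage retries does not hold up records that have only failed once.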

In conclusion, handling failures and errors in Kafka is crucial for building reliable and robust applications. By understanding the different types of errors and implementing appropriate retry mechanisms, you can ensure that your application can recover from failures and continue processing messages effectively. Spring Boot provides convenient tools and configurations to handle these scenarios, making it easier to build resilient Kafka applications.
